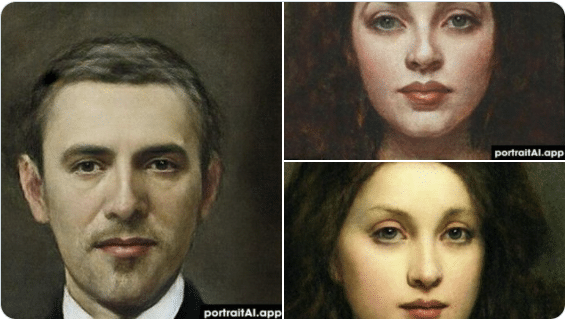

It was a fun use of the technology to create content. And the results were amazing, if your skin was white. The AI's problem was that most well-known paintings of that era were of white Europeans, so the data used to train the algorithm consisted mostly of paintings of white-skinned people. You can imagine what happened next… The app turned people of every ethnicity into white Europeans. The AI was doing exactly the job it was trained for, but when it processed selfies of people from any other ethnic group, the results were abhorrent. This was a narrow AI with no common sense, trained on biased data that no human supervisor had spotted at the time.

Put biased data in, and you’ll get biased results out.

> Tried that portrait AI thing on Obama, Oprah and Laverne Cox. You can’t look at those things and then say AI isn’t biased 😬
>
> Sarah L. Fossheim (they/them) (@liatrisbian), March 8, 2021

Common sense: it’s the least common of all

AIs have no notion of human ‘common sense’. What seems ‘completely obvious’ to you may be ‘unimaginable’ to an AI.

Most AIs are ‘narrow AIs’, so…

They perform the narrow tasks they’ve been trained for and have neither the broader perspective nor the ethical judgment you’d expect from a person. That’s why AI solutions need human oversight to ensure they’re ethical: that they protect privacy, comply with regulations, are culturally appropriate, and minimize bias.

Look out for ‘AI bias’

To avoid bias, the training data must be representative of the people the system will serve, and the assumptions made while developing the algorithm must be checked for prejudice.
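One way to act on the first half of that advice is to measure how each group is represented in the training data before any training happens. The sketch below is a minimal, hypothetical check in Python: the function name `representation_report`, the group labels, the target shares and the 0.8 threshold are illustrative assumptions, not taken from the source or from any particular library. It compares each group's share of the training set with its expected share of a reference population and flags groups that fall short.

```python
from collections import Counter


def representation_report(samples, reference, min_ratio=0.8):
    """Compare each group's share of the training data with its expected share.

    samples:   iterable of group labels, one per training example (hypothetical)
    reference: dict of group label -> expected share of the population (sums to 1)
    min_ratio: flag a group if observed_share / expected_share < min_ratio
               (0.8 is an arbitrary illustrative threshold)
    """
    counts = Counter(samples)
    total = sum(counts.values())
    report = {}
    for group, expected in reference.items():
        # Share of the training set that belongs to this group (0 if no data).
        observed = counts.get(group, 0) / total if total else 0.0
        # A group with no expected share can never be "under-represented".
        ratio = observed / expected if expected > 0 else float("inf")
        report[group] = {
            "observed": observed,
            "expected": expected,
            "under_represented": ratio < min_ratio,
        }
    return report


if __name__ == "__main__":
    # Hypothetical skin-tone labels for a training set of 100 portraits.
    labels = ["lighter"] * 90 + ["medium"] * 8 + ["darker"] * 2
    # Hypothetical target shares for the users the app is meant to serve.
    target = {"lighter": 0.4, "medium": 0.3, "darker": 0.3}
    for group, row in representation_report(labels, target).items():
        flag = "UNDER-REPRESENTED" if row["under_represented"] else "ok"
        print(f"{group:8} observed {row['observed']:.0%}, "
              f"expected {row['expected']:.0%}: {flag}")
```

A count like this only catches under-representation in data that is already labelled by group; it says nothing about whether the labels themselves, or the developers' assumptions, are biased, which still needs human review.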

Source: https://www.lexalytics.com/lexablog/bias-in-ai-machine-learning